Advancing machine learning

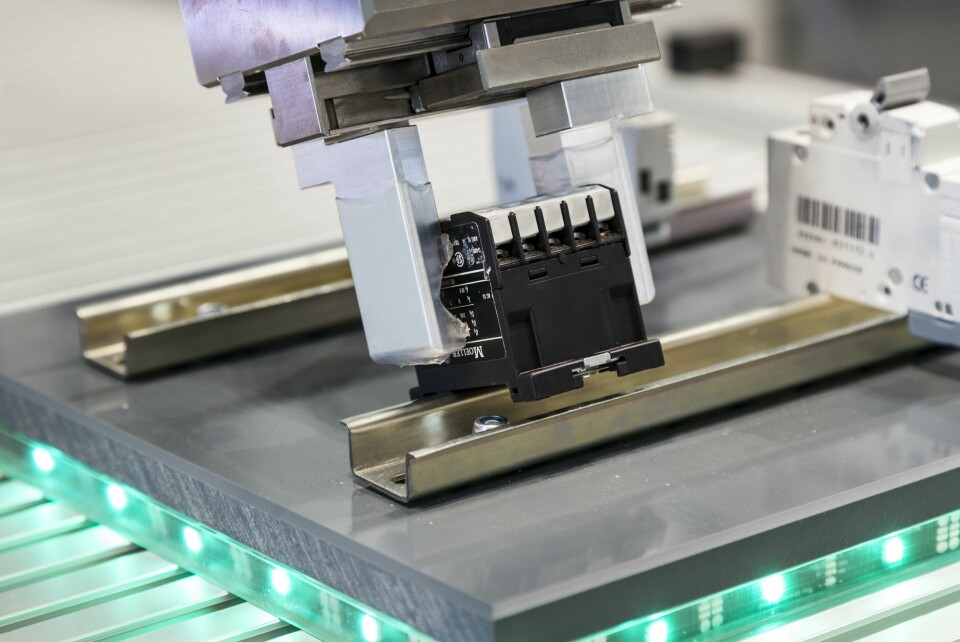

Assembly operations still offer a lot of potential for automation. A new project aims to take machine learning to the next level.

Fraunhofer IPA and the Institute of Industrial Manufacturing and Management IFF at the University of Stuttgart are partnering in the ‘Rob-aKademI’ research project to simplify robot programming and make assembly tasks easier to automate. AMS spoke with IPA project leader Arik Lämmle to find out more about the technology and challenges.

AMS: The goal of the Rob-aKademI project is to simplify robot programming for automated assembly tasks. Could you provide some context as to the existing challenges in this area?

Arik Lämmle: What we are mainly, but not exclusively, focusing on is assembly automation. Even though there is a lot of potential for automation in those assembly tasks, the solutions we currently have do not meet the technical requirements to automate these tasks. A big challenge in this case is product variance.

What we see right now is that, with regard to assembly, we are always a little bit behind, because for many currently automated tasks like handling or even welding, position-based controls for robots – you give the robot a path and the robot follows this path – are enough.

But for assembly, if you only do this based on position, you’ll rarely succeed; you still need some fine-tuning. What comes into this problem is the dimension of force, and we need force control to support the assembly process in, I would say, at least 80% of assembly tasks.

From our experience, the main challenge is the high variance and the need for production systems to adapt efficiently to these requirements. We need flexibility in the means of programming the robot, and the program needs to adapt to any changes in the product, like uncertainties, tolerances or even a new variant.

AMS: The technology you are developing utilises a digital twin to simulate the physical environment. Could you offer insight into how and what the robot learns through this process?

AL: The main benefit of simulation environments or tools is you can test your system before it even physically exists. But if we think about learning, we speak of learning by trial-and-error, like in reinforcement learning, which is the field we apply.

We build up a digital twin in a physics simulation environment, but we only simulate and model the aspects of the world that are needed for training, we don’t try to develop a perfect world inside a simulation.

For example, in assembly, what we need are the robot dynamics, taking into consideration joint friction or gravitational effects, and also contact forces. These are the things we need to simulate. We put a lot of effort into fine-tuning this in the simulation to make the assembly process you want to learn really accurate. So, the aim is to provide good training data to learn a good process.

And the biggest benefit of our approach is you give the robot the ability to learn each motion of the joint. At each stage, in any specific situation, the robot has endless possibilities to move.

In our approach we commonly use a skill formalism named “pitasc”, developed at Fraunhofer IPA. This skill formalism also controls the robot hardware, and you can apply it to any robot. The skills take a lot of the complexity out of the learning process, because you don’t have these endless possibilities of the joints, but rather only the skill parameters which you want to learn. Of course, it’s also possible to transfer these skill parameters onto the real robot.
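To illustrate why learning skill parameters is a smaller problem than learning joint motions, here is a minimal Python sketch. It does not use pitasc’s actual interface: the parameter names, units and ranges, as well as the helper functions, are hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ParamRange:
    """Closed interval from which a skill parameter may be drawn."""
    low: float
    high: float

    def sample(self, rng: random.Random) -> float:
        return rng.uniform(self.low, self.high)


# Hypothetical parameters of an insertion skill (illustrative names and ranges).
INSERT_SKILL_RANGES = {
    "approach_speed_mm_s": ParamRange(1.0, 20.0),
    "contact_force_n": ParamRange(1.0, 15.0),
    "search_radius_mm": ParamRange(0.0, 2.0),
}


def sample_skill_params(ranges, rng):
    """Draw one candidate parameter set for the learner to try out."""
    return {name: r.sample(rng) for name, r in ranges.items()}


def joint_level_dimensions(num_joints: int, num_steps: int) -> int:
    """Number of free values if every joint command at every step is learned."""
    return num_joints * num_steps


rng = random.Random(0)
params = sample_skill_params(INSERT_SKILL_RANGES, rng)
print("skill-level search dimensions:", len(params))
print("joint-level search dimensions:", joint_level_dimensions(6, 500))
```

In this toy comparison, a six-axis robot commanded over 500 control steps has 3,000 free values, while the skill exposes three bounded parameters that could also be carried over to the real robot.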

AMS: You mentioned reinforcement learning and using a trial-and-error process. So, can the robot build a model of what’s right and what’s wrong?

AL: Yes, let’s take an example. You have a part that can be easily damaged, such as a small plastic snap-fit on your component (electric terminals, etc.). If the allowed forces for fitting these parts are exceeded, the snap-fit will break. If the robot breaks the part, or takes too long to complete the task, we could provide a negative reward in each time step. In the end, the robot is not only meant to find a suitable solution, but also a fast solution for the process.

It’s one approach we thought about at the beginning. We can learn from success or failure, like a sparse reward, which is not really applicable in assembly because we have a continuous process. The deformation of the part inside the process plays a big role in the assembly itself, so we provide a shaped reward function and we define steps alongside the process. And this is a concept we apply in these cases: telling the robot, yes, you’re getting closer to your goal, and closer and closer, and you’re not pushing too hard, the forces are limited – this is really key to the reinforcement learning.
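The reward ideas described here (a penalty per time step, a penalty for breaking the part, and positive feedback for getting closer while keeping forces limited) can be sketched in Python as follows. This is only an illustration under stated assumptions, not the project’s reward function: the class, the function, the units (millimetres and newtons) and all weights and limits are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardConfig:
    step_penalty: float = 0.01      # cost per time step, favours fast solutions
    progress_weight: float = 1.0    # reward per mm of progress toward the goal
    force_weight: float = 0.05      # cost per newton above the soft limit
    soft_force_limit: float = 10.0  # N, forces above this are discouraged
    max_force: float = 20.0         # N, part is assumed to break above this
    break_penalty: float = 10.0     # one-off cost for breaking the part
    success_bonus: float = 5.0      # one-off bonus for finishing the task
    goal_tolerance: float = 0.1     # mm, remaining distance counted as success


def shaped_reward(prev_distance, distance, force, cfg=RewardConfig()):
    """Return (reward, done) for a single time step of an assembly episode."""
    if force > cfg.max_force:
        # Exceeding the allowed force breaks the part and ends the episode.
        return -cfg.break_penalty, True

    reward = -cfg.step_penalty
    # Positive when the robot moved closer to the goal, negative otherwise.
    reward += cfg.progress_weight * (prev_distance - distance)
    # Discourage pushing harder than necessary.
    reward -= cfg.force_weight * max(0.0, force - cfg.soft_force_limit)

    if distance <= cfg.goal_tolerance:
        return reward + cfg.success_bonus, True
    return reward, False


gentle, _ = shaped_reward(10.0, 8.0, force=5.0)
forceful, _ = shaped_reward(10.0, 8.0, force=15.0)
print(gentle > forceful)  # same progress, but the gentler step scores higher
```

A sparse variant would return a non-zero value only on the success or breakage branches; the progress and force terms are what give the learner feedback during the continuous process.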

AMS: You have developed these learning modules. Could you describe those a little bit more?

AL: We thought about what could be interesting applications, using a step-by-step approach. We have one dedicated learning module that takes the commonly used snap-fits into account. Another module is focusing on force-based control because, as we discussed, this is essential for applying robots in assembly, and the last one is how we embed cameras into the process.

As I mentioned before, most cases in assembly are based on deformation of parts, like the snap-fits, or processes like screw fixing – it’s minor, but still a deformation. Also placing, for example, or inserting, could be done based on force control, because the deformations are minor.

The force control module takes care of all these aspects of assembly where it’s pure insertion tasks and handling tasks. You still have many applications where deformation is key (snap-fits), and all these processes have a very high potential for automation. But still, there are minor uncertainties, tolerances – the process still fails. So how can we model this inside a simulation?

An important element of this learning module is the extension of the simulator. We enable our rigid body simulation to approximate deformable components, which was initially not part of the simulation. A process simulation that goes into detail takes a lot of computational resources and a long time to compute, so you do not get enough data for training in a feasible time.

We try to find the balance between these challenges. We have fast simulations, on the one side, developed for gaming and animation, etc. But how do we bring deformation in? These learning modules contain pre-knowledge about these tasks. They contain several of the previously mentioned robot skills, some parameter ranges and also a mapping from CAD data to the specific skill.

For example: how do I know from the CAD data of my snap-fit what my maximum allowed forces are, or how I need to shape my reward function in order to fulfil this process? This is part of these learning modules. They bring a lot of knowledge, and encapsulate the complexity of setting everything up, providing all the transformations you need to make the process as easy as possible.
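As a rough illustration of how a force limit might be derived from geometry, the Python sketch below uses the textbook beam-theory estimate for a straight cantilever snap-fit arm with a constant rectangular cross-section: the tip force at which the root strain reaches its allowable value is P = E·ε·b·h² / (6·L). This is an assumption for illustration, not the mapping used in the learning modules; the function name and example values are hypothetical, and real snap-fits (tapered arms, friction, insertion angle) need a more detailed model.

```python
def max_deflection_force(width_mm, thickness_mm, length_mm,
                         modulus_mpa, allowable_strain):
    """Tip force in newtons at which the root strain of a rectangular
    cantilever reaches `allowable_strain` (simple beam theory).

    width_mm, thickness_mm, length_mm: arm dimensions, e.g. read from CAD data
    modulus_mpa: elastic modulus of the material in N/mm^2
    allowable_strain: permissible strain as a fraction (0.02 means 2 %)
    """
    if min(width_mm, thickness_mm, length_mm, modulus_mpa) <= 0:
        raise ValueError("dimensions and modulus must be positive")
    if allowable_strain <= 0:
        raise ValueError("allowable strain must be positive")
    return (modulus_mpa * allowable_strain * width_mm * thickness_mm ** 2
            / (6.0 * length_mm))


# Illustrative values only: a 5 mm wide, 2 mm thick, 15 mm long arm.
limit_n = max_deflection_force(5.0, 2.0, 15.0,
                               modulus_mpa=2000.0, allowable_strain=0.02)
print(f"force limit: {limit_n:.2f} N")
```

A value like this could then serve as the maximum force in a reward function, so that exceeding it is penalised during training.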

AMS: Another part of the project is Deep Picking. Could you offer some more insight into this?

AL: We also have partner projects focusing explicitly on deep picking, but it is definitely one of the parts where we’re also using a digital twin and learning technologies like deep learning or even reinforcement learning.

You can create almost unlimited possible combinations of parts inside a simulator. You just drop the parts inside the bin and then you have all the possible entanglements of the parts. And if you assume you have rigid parts, you can entangle them, find a good grasping spot, and let the robot try it out again. Simply try it out, over and over again.

This is something my colleagues are also working on. They simulate the box, they let parts drop in and then they generate, based on the simulated data, grasping positions. That’s how they find the best grasp for this particular part inside this particular situation. Using advanced reinforcement learning, or deep reinforcement learning techniques especially, then enables us to generalise from these examples to unseen examples, and to transfer this later on to a real robot.

AMS: Is the hardware going to become the limiting factor in realising the potential of the kind of programming you are developing?

AL: I would definitely say that hardware is still ahead of software in terms of robots. If you take a look at the kinematics of the robot, we have already reached our optimum. Of course, we can add more axes or sensors with a high resolution, or develop advanced grippers, which are capable of grasping tens of thousands of parts. I think there is more to come from research in these fields, but right now the software is, unfortunately, lagging behind.

So, I think our biggest challenge for automation and robot-based automation is to find suitable solutions to address the issues of mass personalisation and high-variance production in high-wage countries, if we want to keep our capacities here in the long term.

Breaking this down, how can we enable the robot to adapt to product variances, uncertainties and tolerances, on the fly, without needing to explicitly program them over and over again? If you think about one of the most famous definitions of machine learning, the ability of a computer to execute tasks without being explicitly programmed to do these tasks, then I think the connection becomes somewhat clear.

AMS: What do you see as the key trends/developments/challenges in automation in the future?

AL: Explaining and gaining trust in artificial intelligence is key. So, not only developing the AI technologies, but also communicating the benefits and opportunities we gain from applying intelligent or cognitive systems. I think this is one of the challenges. We definitely need to focus also on gaining trust in AI by having explainable AI and not only AI. Think about business decisions. If you do not trust the algorithm behind it, how can you make a decision based on the algorithm’s prediction?

Read more about automation in the AMS Winter digital edition.